L2 network between sites often have nodes that cannot reach one another

- This topic has 8 replies, 3 voices, and was last updated 3 years, 6 months ago by Gregory Daues.


August 8, 2022 at 12:44 pm #2593

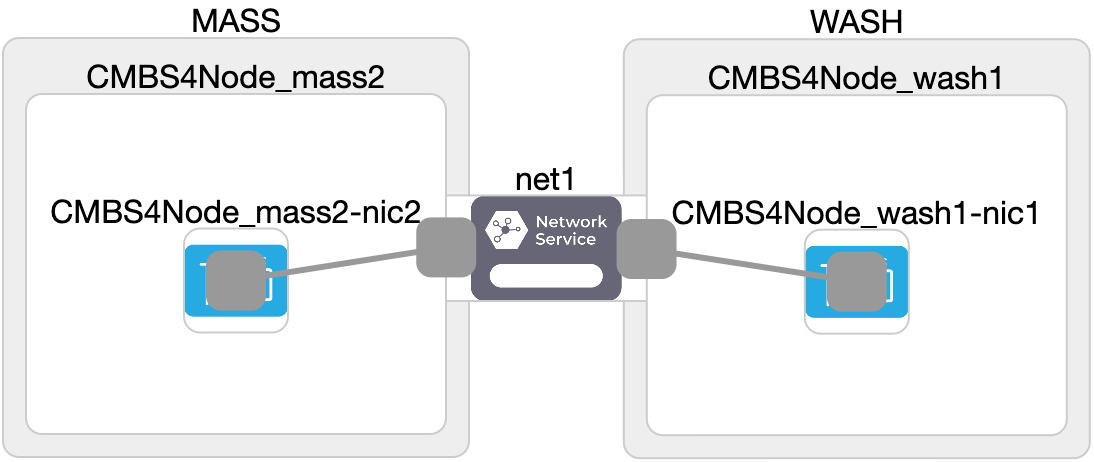

I have been creating L2 networks and adding IP numbers between a pair of FABRIC sites using the code snippets of

jupyter-examples/fabric_examples/fablib_api/create_l2network_wide_area

e.g.,

# Network

net1 = slice.add_l2network(name=network_name, interfaces=[iface1, iface2])

I have observed that the two nodes on such a network can reach one another (ping, ssh) in a bit less than half of the Slices created; in a bit more than half, the nodes cannot reach one another. Adding the IP addresses seems to have succeeded, since each node can ping its own address.

[rocky@666fe764-bb4e-4302-b8af-1f1657809e2d-cmbs4node-star2 ~]$ ping 1235:5679::2

PING 1235:5679::2(1235:5679::2) 56 data bytes

64 bytes from 1235:5679::2: icmp_seq=1 ttl=64 time=0.040 ms

64 bytes from 1235:5679::2: icmp_seq=2 ttl=64 time=0.014 ms

64 bytes from 1235:5679::2: icmp_seq=3 ttl=64 time=0.009 ms

64 bytes from 1235:5679::2: icmp_seq=4 ttl=64 time=0.008 ms

[rocky@666fe764-bb4e-4302-b8af-1f1657809e2d-cmbs4node-star2 ~]$ ping 1235:5679::1

PING 1235:5679::1(1235:5679::1) 56 data bytes

From 1235:5679::2: icmp_seq=1 Destination unreachable: Address unreachable

From 1235:5679::2: icmp_seq=2 Destination unreachable: Address unreachable

From 1235:5679::2: icmp_seq=3 Destination unreachable: Address unreachable

(ping -6 gives the same.)

Just from the samples of my attempts, it seems that success or failure may depend on the pair of sites. For example, NCSA-STAR has failed twice and SALT-UTAH has succeeded twice, but a particular site does not necessarily point to failure, as NCSA-MICH and DALL-STAR both succeeded.

Any ideas on how to dig into the sporadic behavior? Could this be some kind of firewall/security group issue? If so, would it be visible in the cloud-init logging on a node, or is such firewall/security handling done in FABRIC services?

Greg
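One way to look for a pattern in results like these is to tally outcomes per individual site rather than per pair. The sketch below uses only the six outcomes reported in this post; the `observations` list and the `tally_by_site` helper are illustrative names, not part of fablib.

```python
from collections import Counter

# Outcomes as reported in the post above: (site pair, nodes reachable?).
observations = [
    ("NCSA-STAR", False),
    ("NCSA-STAR", False),
    ("SALT-UTAH", True),
    ("SALT-UTAH", True),
    ("NCSA-MICH", True),
    ("DALL-STAR", True),
]


def tally_by_site(obs):
    """Count successes and failures for each site that appears in a pair."""
    ok, failed = Counter(), Counter()
    for pair, reachable in obs:
        for site in pair.split("-"):
            (ok if reachable else failed)[site] += 1
    return ok, failed


ok, failed = tally_by_site(observations)
for site in sorted(set(ok) | set(failed)):
    print(f"{site}: {ok[site]} ok, {failed[site]} failed")
```

With so few samples this cannot isolate a cause, but it does show that NCSA and STAR each appear in both a failing and a succeeding pair, which is consistent with the observation that no single site points to failure.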

August 9, 2022 at 10:08 am #2594

I am having difficulty reproducing the previous failures, so perhaps something has been fixed (?). I’ll continue to check for issues.

August 10, 2022 at 2:35 pm #2616

I suspect this was an intermittent issue with some underlying infrastructure, or possibly a link that was slow to be instantiated. If you wait a few minutes, the link may become active.

If you see this again, can you respond to this forum thread and include your slice ID? If the right developers are available, they can look at the underlying code/infrastructure and see if this is a bug.

Also, let us know if you figure out how to consistently recreate it.

Paul
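The "wait a few minutes" suggestion can be sketched as a polling loop that retries a reachability check until it passes or a timeout expires. This is a minimal sketch, not a FABRIC or fablib facility: `wait_until_reachable` and `ping_once` are hypothetical helpers, the timeout and interval values are arbitrary, and `ping_once` assumes a Linux iputils `ping` on the node.

```python
import subprocess
import time


def ping_once(address):
    """Send one IPv6 ping; True if a reply arrived (assumes Linux iputils ping)."""
    result = subprocess.run(
        ["ping", "-6", "-c", "1", "-W", "2", address],
        stdout=subprocess.DEVNULL,
        stderr=subprocess.DEVNULL,
    )
    return result.returncode == 0


def wait_until_reachable(check, timeout_s=300.0, interval_s=15.0,
                         sleep=time.sleep, clock=time.monotonic):
    """Poll check() until it returns True or timeout_s elapses.

    Returns the number of attempts made on success, or None on timeout.
    """
    deadline = clock() + timeout_s
    attempts = 0
    while True:
        attempts += 1
        if check():
            return attempts
        # Give up if another full interval would run past the deadline.
        if clock() + interval_s > deadline:
            return None
        sleep(interval_s)
```

On a node, a call such as `wait_until_reachable(lambda: ping_once("1244:5679::2"))` would distinguish a link that is merely slow to come up (returns an attempt count) from one that stays down for the whole window (returns `None`).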

- This reply was modified 3 years, 6 months ago by Paul Ruth.

August 10, 2022 at 2:39 pm #2617

Yes, I saw this failure maybe 7 times over a stretch of 2-3 days last week, but it has not arisen recently. I will keep watching and try to capture an as-it-happens example.

August 12, 2022 at 10:55 am #2636

I was able to reproduce a case where the nodes in the Slice with the L2 network cannot reach one another. The Slice I just made this morning is MySliceAug12A.

“ip addr list eth1” on the nodes shows respectively

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 02:df:f2:32:8d:ad brd ff:ff:ff:ff:ff:ff

inet6 1244:5679::1/64 scope global

valid_lft forever preferred_lft forever

3: eth1: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 02:4b:b8:69:ef:db brd ff:ff:ff:ff:ff:ff

inet6 1244:5679::2/64 scope global

valid_lft forever preferred_lft forever

but on “node1”

[rocky@75af8f33-0a4f-4941-824c-4a010d40f20f-cmbs4node-wash1 ~]$ ping 1244:5679::1

PING 1244:5679::1(1244:5679::1) 56 data bytes

64 bytes from 1244:5679::1: icmp_seq=1 ttl=64 time=0.028 ms

64 bytes from 1244:5679::1: icmp_seq=2 ttl=64 time=0.007 ms

64 bytes from 1244:5679::1: icmp_seq=3 ttl=64 time=0.005 ms

64 bytes from 1244:5679::1: icmp_seq=4 ttl=64 time=0.005 ms

^C

--- 1244:5679::1 ping statistics ---

4 packets transmitted, 4 received, 0% packet loss, time 3089ms

rtt min/avg/max/mdev = 0.005/0.011/0.028/0.010 ms

[rocky@75af8f33-0a4f-4941-824c-4a010d40f20f-cmbs4node-wash1 ~]$ ping 1244:5679::2

PING 1244:5679::2(1244:5679::2) 56 data bytes

From 1244:5679::1: icmp_seq=1 Destination unreachable: Address unreachable

From 1244:5679::1: icmp_seq=2 Destination unreachable: Address unreachable

From 1244:5679::1: icmp_seq=3 Destination unreachable: Address unreachable

^C

--- 1244:5679::2 ping statistics ---

6 packets transmitted, 0 received, +3 errors, 100% packet loss, time 5105ms

pipe 3

[rocky@75af8f33-0a4f-4941-824c-4a010d40f20f-cmbs4node-wash1 ~]$ curl -v telnet://[1244:5679::2]:22

* Rebuilt URL to: telnet://[1244:5679::2]:22/

* Trying 1244:5679::2...

* TCP_NODELAY set

* connect to 1244:5679::2 port 22 failed: No route to host

* Failed to connect to 1244:5679::2 port 22: No route to host

* Closing connection 0

curl: (7) Failed to connect to 1244:5679::2 port 22: No route to host

[rocky@75af8f33-0a4f-4941-824c-4a010d40f20f-cmbs4node-wash1 ~]$ curl -6 -v telnet://[1244:5679::2]:22

* Rebuilt URL to: telnet://[1244:5679::2]:22/

* Trying 1244:5679::2...

* TCP_NODELAY set

* connect to 1244:5679::2 port 22 failed: No route to host

* Failed to connect to 1244:5679::2 port 22: No route to host

* Closing connection 0

curl: (7) Failed to connect to 1244:5679::2 port 22: No route to host

Greg
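When collecting many runs like the one above, it can help to reduce each `ping` transcript to a small summary. The sketch below parses the statistics line in the format shown in this post; `summarize_ping` and `classify` are illustrative names, and the regular expression is only known to match the iputils output quoted here. An "Address unreachable" error reported from the node's own address typically means neighbor discovery got no answer from the peer, which would point toward the L2 path rather than a host firewall, though that is an inference and not something the output proves.

```python
import re

# Matches e.g. "6 packets transmitted, 0 received, +3 errors, 100% packet loss".
STATS_RE = re.compile(
    r"(\d+) packets transmitted, (\d+) received"
    r"(?:, \+(\d+) errors)?, (\d+(?:\.\d+)?)% packet loss"
)


def summarize_ping(output):
    """Extract counts from ping output; returns None if no statistics line is found."""
    m = STATS_RE.search(output)
    if m is None:
        return None
    return {
        "sent": int(m.group(1)),
        "received": int(m.group(2)),
        "errors": int(m.group(3) or 0),
        "loss_pct": float(m.group(4)),
        "address_unreachable": "Address unreachable" in output,
    }


def classify(summary):
    """Label a summary as 'reachable', 'unreachable', or 'lossy'."""
    if summary["received"] == 0:
        return "unreachable"
    if summary["received"] == summary["sent"]:
        return "reachable"
    return "lossy"


# The two statistics lines quoted in the post above.
self_ping = "4 packets transmitted, 4 received, 0% packet loss, time 3089ms"
peer_ping = (
    "From 1244:5679::1: icmp_seq=1 Destination unreachable: Address unreachable\n"
    "6 packets transmitted, 0 received, +3 errors, 100% packet loss, time 5105ms"
)

print(classify(summarize_ping(self_ping)))
print(classify(summarize_ping(peer_ping)))
```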

August 12, 2022 at 12:51 pm #2638

Is this slice still up? I’m seeing if someone can look at it.

August 12, 2022 at 1:54 pm #2639

Yes, that slice MySliceAug12A is still up; there is another active slice, MySliceAug12B, which did not exhibit the issue (different sites). The project is “CMB-S4 Phase one”.

August 12, 2022 at 3:15 pm #2640

Greg, could you please delete this slice and recreate it?

We had some leftover layer3 connections from testing which were causing the issue. We were able to identify and clear them. It should work now. Please let us know if you still face this issue.

August 12, 2022 at 3:40 pm #2641

I have created a new slice, MySliceAug12C, with the same attributes, and the issue has not occurred; the nodes/ports are reachable. I’ll watch in case it ever occurs again, but it looks like it is resolved!
