Performance Drop on ConnectX-6 After Release 1.9

This topic has 6 replies, 2 voices, and was last updated 4 months, 3 weeks ago by Rasman Mubtasim Swargo.


September 24, 2025 at 1:07 am #9029

Hello,

I have been using ConnectX-6 SmartNICs for my research on high-speed data transfers. Before release 1.9, I was consistently getting around 20 Gbps throughput when running iperf3 directly over SSH (not inside Docker). However, since upgrading to release 1.9, I am now only seeing a maximum of about 5 Gbps with the same setup and configuration.

For context:

- I am using the iperf3 optimized SmartNIC notebook provided by FABRIC.

- I run my experiments outside of Docker, after the site creation, using VSCode SSH connections.

- The only change in my workflow was moving from the previous release to release 1.9.

- For my research, I require 20–25 Gbps bandwidth between nodes located at two separate sites.

Any insights into possible causes and potential mitigation plans would be greatly appreciated.

Thanks,

Rasman

This topic was modified 4 months, 3 weeks ago by Rasman Mubtasim Swargo.


September 24, 2025 at 9:49 am #9032

Hi Rasman,

By default, the standard iperf3 version does not perform well with multiple streams. ESnet provides a patched version that resolves this issue and delivers significantly better performance. This fixed iperf3 is already packaged inside the container.
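For readers who want to script a multi-stream run, here is a minimal Python sketch. It assumes the standard iperf3 client flags (`-c`, `-P`, `-t`, `-J`) and the `end.sum_received.bits_per_second` field of iperf3's JSON report for TCP tests. The helper names, the server address, and the sample report are hypothetical and only illustrate the shape of the data.

```python
import json
import shlex


def build_iperf3_command(server, streams=8, seconds=30):
    """Build an iperf3 client command with parallel streams and JSON output."""
    if streams < 1:
        raise ValueError("streams must be at least 1")
    return ["iperf3", "-c", server, "-P", str(streams), "-t", str(seconds), "-J"]


def received_gbps(report):
    """Aggregate receiver-side throughput in Gbps from an iperf3 JSON report."""
    return report["end"]["sum_received"]["bits_per_second"] / 1e9


# Hypothetical server address; substitute the peer node's data-plane IP.
cmd = build_iperf3_command("192.168.1.2", streams=8, seconds=30)
print(shlex.join(cmd))

# Made-up, trimmed report with the same nesting iperf3 uses for TCP tests.
sample = json.loads('{"end": {"sum_received": {"bits_per_second": 9.4e9}}}')
print(f"{received_gbps(sample):.1f} Gbps")
```

In practice the command list would be passed to `subprocess.run(cmd, capture_output=True, text=True)` on the client node and the captured standard output fed to `json.loads`.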

If you would like to run it directly on the host, you can install it with the following steps:

    curl -L https://github.com/esnet/iperf/releases/download/3.18/iperf-3.18.tar.gz > iperf-3.18.tar.gz
    tar -zxvf iperf-3.18.tar.gz
    cd iperf-3.18
    sudo apt update
    sudo apt install build-essential
    sudo ./configure
    make
    sudo make install

Additionally, please make sure that the script node_tools/host_tune.sh (included with the notebook) has been executed on the relevant nodes.

If you continue to see lower bandwidth, kindly share your slice ID so I can take a closer look.

Thanks,

Komal

September 24, 2025 at 3:00 pm #9039

Slice ID: 25c5b6c2-f0f8-4cc9-b4e1-cad570231aca

One thing I forgot to mention: the execution often gets stuck in the slice submission cell. The post-boot config of one node usually completes, but the other hangs. In the output below, FIU's node never delivers the 'Done!' message:

    Time to StableOK 246 seconds
    Running post_boot_config ...
    Running post boot config threads ...
    Post boot config Node-GATECH, Done! (16 sec)

Here’s the code:

    sites = ['GATECH', 'FIU']
    print(f"Sites: {sites}")

    node1_name = 'Node1'
    node2_name = 'Node2'
    cores=8
    ram=64
    disk=1000
    image='default_ubuntu_20'
    slice_name = 'iPerf3-tuned-nic-x6-64gb-1tb-GF-2'
    nic_name = 'nic1'
    model_name = 'NIC_ConnectX_6'
    network_name='net1'

    from ipaddress import ip_address, IPv4Address, IPv6Address, IPv4Network, IPv6Network

    subnet = IPv4Network("192.168.1.0/24")
    available_ips = list(subnet)[1:]

    #Create Slice
    slice = fablib.new_slice(name=slice_name)

    net1 = slice.add_l2network(name=network_name, subnet=subnet)

    for s in sites:
        # Node1
        node1 = slice.add_node(name=f"Node-{s}", cores=cores, ram=ram, disk=disk, site=s, image=image)
        iface1 = node1.add_component(model=model_name, name=nic_name).get_interfaces()[0]
        node1.add_component(model='NVME_P4510', name='nvme1')
        iface1.set_mode('auto')
        net1.add_interface(iface1)
        net1.set_bandwidth(50)
        node1.add_post_boot_upload_directory('node_tools','.')
        node1.add_post_boot_execute('sudo node_tools/host_tune.sh')
        # node1.add_post_boot_execute('node_tools/enable_docker.sh {{ _self_.image }} ')
        # node1.add_post_boot_execute('docker pull fabrictestbed/slice-vm-ubuntu20-network-tools:0.0.1 ')

    #Submit Slice Request
    slice.submit();

I have to stop the execution and move to the next cell. I’ll report here what I get after running the esnet iperf3. Let me know if you need anything to investigate this issue.

This reply was modified 4 months, 3 weeks ago by Rasman Mubtasim Swargo.

September 24, 2025 at 3:08 pm #9041

Your provided snippet fails at the 'make' command on both nodes:

    ubuntu@Node-GATECH:~/iperf-3.18$ make
    Making all in src
    make[1]: Entering directory '/home/ubuntu/iperf-3.18/src'
    make all-am
    make[2]: Entering directory '/home/ubuntu/iperf-3.18/src'
    CC iperf3-main.o
    main.c:212:1: fatal error: opening dependency file .deps/iperf3-main.Tpo: Permission denied
      212 | }
          | ^
    compilation terminated.
    make[2]: *** [Makefile:974: iperf3-main.o] Error 1
    make[2]: Leaving directory '/home/ubuntu/iperf-3.18/src'
    make[1]: *** [Makefile:733: all] Error 2
    make[1]: Leaving directory '/home/ubuntu/iperf-3.18/src'
    make: *** [Makefile:404: all-recursive] Error 1

September 24, 2025 at 5:41 pm #9042

Hi Rasman,

I forgot to mention that the steps for installing iperf3 should be run as the root user. On your VM, I did the following:

sudo su - curl -L https://github.com/esnet/iperf/releases/download/3.18/iperf-3.18.tar.gz > iperf-3.18.tar.gz tar -zxvf iperf-3.18.tar.gz cd iperf-3.18 sudo apt update sudo apt install build-essential sudo ./configure; make; make install sudo ldconfigI also applied the following host tuning (

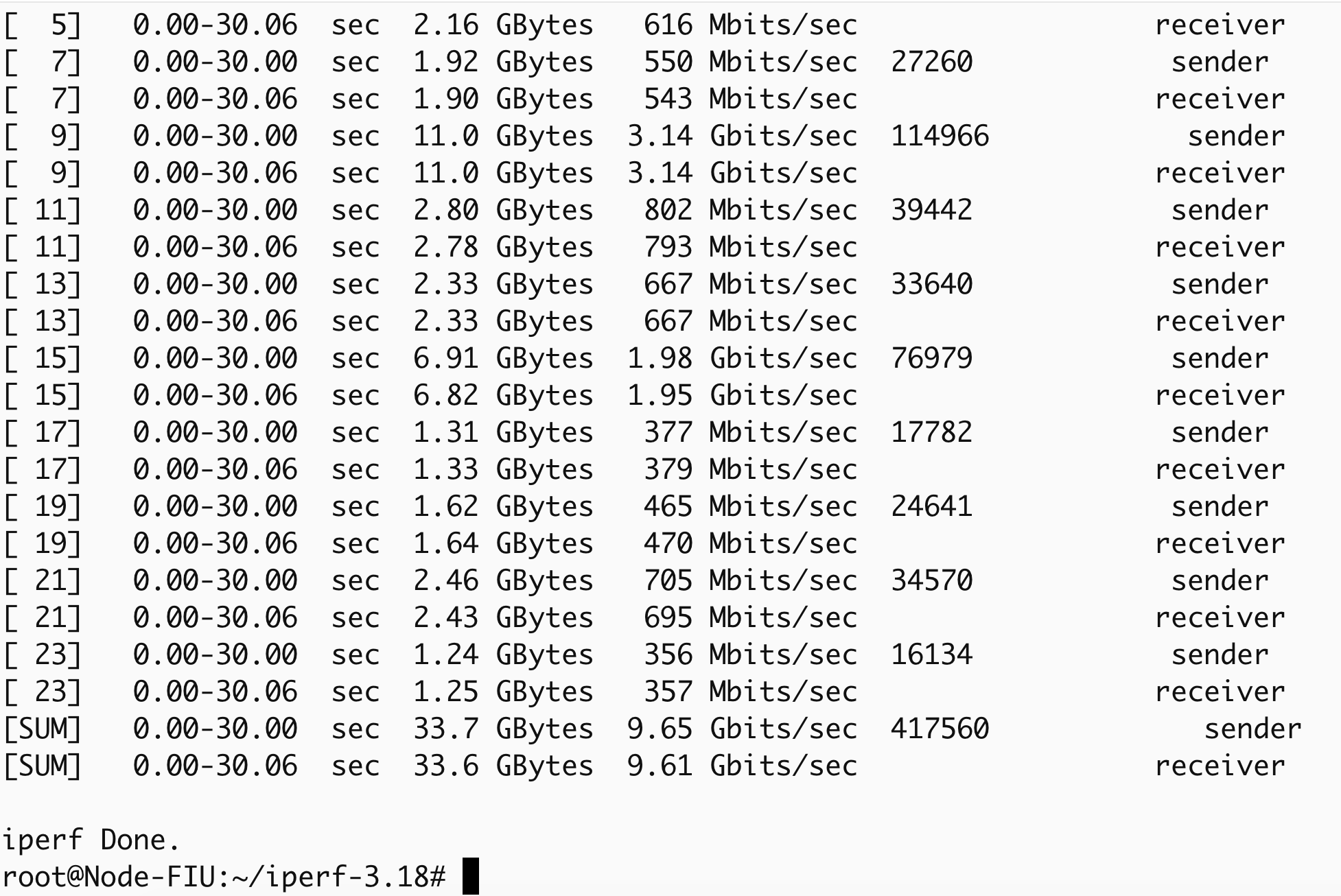

node_tools/host_tune.sh) on both VMs:#!/bin/bash # Linux host tuning from https://fasterdata.es.net/host-tuning/linux/ cat >> /etc/sysctl.conf <<EOL # allow testing with buffers up to 128MB net.core.rmem_max = 536870912 net.core.wmem_max = 536870912 # increase Linux autotuning TCP buffer limit to 64MB net.ipv4.tcp_rmem = 4096 87380 536870912 net.ipv4.tcp_wmem = 4096 65536 536870912 # recommended default congestion control is htcp or bbr net.ipv4.tcp_congestion_control = bbr # recommended for hosts with jumbo frames enabled net.ipv4.tcp_mtu_probing = 1 # recommended to enable 'fair queueing' net.core.default_qdisc = fq #net.core.default_qdisc = fq_codel EOL sysctl --system # Turn on jumbo frames for dev inbasename -a /sys/class/net/*; do ip link set dev $dev mtu 9000 doneWith these changes, I’m now seeing bandwidth close to 10G (see snapshot below).

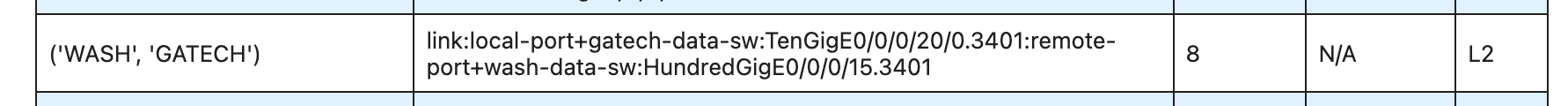
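To see why the buffer limits in host_tune.sh matter on a long path, the bandwidth-delay product (bytes that must be in flight to keep the link full) can be estimated as in the sketch below. The 25 Gbps target comes from the original question; the round-trip times are hypothetical placeholders, since the thread does not report a measured RTT between the sites.

```python
def bdp_bytes(bandwidth_gbps, rtt_ms):
    """Bandwidth-delay product in bytes: bits per second times RTT, divided by 8."""
    return round(bandwidth_gbps * 1e9 * rtt_ms / 1e3 / 8)


# net.core.rmem_max value set by host_tune.sh in this thread.
RMEM_MAX = 536870912

# Hypothetical round-trip times; measure the real one with ping between the nodes.
for rtt_ms in (20, 50, 100):
    need = bdp_bytes(25, rtt_ms)
    verdict = "fits within" if need <= RMEM_MAX else "exceeds"
    print(f"RTT {rtt_ms:>3} ms: {need / 2**20:.0f} MiB in flight, {verdict} rmem_max")
```

This is the aggregate for a single flow filling the path. With several parallel iperf3 streams, each stream only needs to carry its share of that total.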

According to fablib.list_links(), links from GATECH are capped at 8G. I’d suggest trying a different site instead of GATECH.

Regarding the slice getting stuck at Submit: your keys may have expired. Please try running the notebook jupyter-examples-rel1.9.0/configure_and_validate/configure_an_validate.ipynb. This should automatically renew your keys if needed.

If it still hangs at submit, please check /tmp/fablib/fablib.log for errors and share here.

Best,

Komal
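As a convenience for the log check suggested above, a short Python sketch like the following could pull out the most recent suspicious lines from /tmp/fablib/fablib.log before sharing them. The marker strings are an assumption, since the thread does not show the log's format; the function name is hypothetical.

```python
from pathlib import Path


def error_lines(log_path, markers=("ERROR", "Traceback"), limit=20):
    """Return the last `limit` lines of a log file that contain any marker string."""
    path = Path(log_path)
    if not path.exists():
        return []
    with path.open(errors="replace") as handle:
        hits = [line.rstrip("\n") for line in handle if any(m in line for m in markers)]
    return hits[-limit:]


# Path taken from the reply above; prints nothing if the file is absent or clean.
for line in error_lines("/tmp/fablib/fablib.log"):
    print(line)
```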

September 24, 2025 at 6:29 pm #9046

I have booked a slice (d6065a22-c893-425f-b12f-3bc0fe4d2481) with NEWY and CERN nodes, which are listed as 320 Gbps. This time, it did not get stuck. Everything went smoothly. I am still getting around 3 Gbps.

Could you please have a look?

I saw that there is another 8 Gbps line listed for (NEWY, CERN). Can you guide me on how to pick sites so that I can get the fastest network speed?

    ('NEWY', 'CERN') link:local-port+cern-data-sw:FourHundredGigE0/0/0/26.3733:remote-port+newy-data-sw:FourHundredGigE0/0/0/60.3733 320 N/A L2

September 24, 2025 at 8:57 pm #9047

It worked after manually doing the steps you described. Thanks.
