slice active but node no longer accessible

This topic has 28 replies, 4 voices, and was last updated 4 years, 3 months ago by Fengping Hu.


November 15, 2021 at 5:10 pm #1055

@Fengping we are still trying to figure out the problem. Can you use another site (Utah?) in the meantime while keeping this slice for now? We don’t want to hold up your work.

November 15, 2021 at 5:14 pm #1056

Hi Ilya, thanks for letting me know. I’m spinning one up at NCSA and will let you know if I see the same problem there.

November 15, 2021 at 5:23 pm #1058

That works too. Do definitely let us know. These are early hiccups; we expect to have fewer of those as we go.

November 16, 2021 at 8:50 pm #1089

It looks like I am seeing the same problem at NCSA. My slice there also lost contact after 1 day despite lease extensions. We will try to improve our deployment automation while these hiccups are being addressed :)

~$ ping 141.142.140.44

PING 141.142.140.44 (141.142.140.44) 56(84) bytes of data.

From 141.142.140.44 icmp_seq=1 Destination Host Unreachable

November 18, 2021 at 1:49 pm #1099

Just to bring this up: we are going to test what happens to long-lived slices. Right now it isn’t clear whether this is related to your extending the slice lifetime or to something happening inside the slice that cuts off access.

November 23, 2021 at 2:44 am #1125

I checked one of your VMs on FABRIC-MAX.

Name: ff5acfa1-bbff-44a0-bf28-3d7d2f038d1f-Node1

IP: 63.239.135.79

In your workflow to configure the slice, you change network settings that affect the Management Network.

[root@node1 ~]# systemctl status NetworkManager

● NetworkManager.service - Network Manager

Loaded: loaded (/usr/lib/systemd/system/NetworkManager.service; disabled; ve>

Active: inactive (dead)

Docs: man:NetworkManager(8)

[root@node1 ~]# systemctl is-enabled NetworkManager

disabled

Interface eth0 should persist its IP address configuration (from an RFC 1918 subnet). The network node of the virtualization platform controls external traffic by either NAT’ing or routing against the configured IP address. Currently you have the following:

[root@node1 ~]# ifconfig -a

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:86:f0:f7:a8 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth0: flags=4098<BROADCAST,MULTICAST> mtu 9000

ether fa:16:3e:49:8e:5a txqueuelen 1000 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 16 bytes 916 (916.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 16 bytes 916 (916.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

When the network settings are reverted to the original for the Management Network, your VM shows the following:

[root@node1 ~]# ifconfig -a

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:69:1f:14:22 txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 9000

inet 10.20.4.94 netmask 255.255.255.0 broadcast 10.20.4.255

inet6 fe80::f816:3eff:fe49:8e5a prefixlen 64 scopeid 0x20<link>

ether fa:16:3e:49:8e:5a txqueuelen 1000 (Ethernet)

RX packets 2015 bytes 232936 (227.4 KiB)

RX errors 0 dropped 31 overruns 0 frame 0

TX packets 1978 bytes 226617 (221.3 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 1160 bytes 58116 (56.7 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 1160 bytes 58116 (56.7 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

It is reachable again:

$ ping 63.239.135.79 -c 3

PING 63.239.135.79 (63.239.135.79): 56 data bytes

64 bytes from 63.239.135.79: icmp_seq=0 ttl=52 time=23.257 ms

64 bytes from 63.239.135.79: icmp_seq=1 ttl=52 time=21.347 ms

64 bytes from 63.239.135.79: icmp_seq=2 ttl=52 time=17.025 ms

--- 63.239.135.79 ping statistics ---

3 packets transmitted, 3 packets received, 0.0% packet loss

round-trip min/avg/max/stddev = 17.025/20.543/23.257/2.607 ms

You need to review the standard installation procedures of platforms such as Docker, Kubernetes, and OpenStack, and consider changes for the Management Network of your slivers.
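The symptom in this post (eth0 present but holding no IPv4 address) can be checked from a script. The following is a minimal sketch, not something from the thread: `iface_has_ipv4` is a hypothetical helper name, and it assumes the one-line output format of `ip -o -4 addr show`, where the second field is the interface name and the third is `inet`.

```sh
# Hypothetical helper: succeed if the named interface has an IPv4 address.
# Reads `ip -o -4 addr show` output on stdin; assumes the iproute2 one-line
# format "<index>: <ifname> inet <addr>/<prefix> ...".
iface_has_ipv4() {
    awk -v dev="$1" '
        $2 == dev && $3 == "inet" { found = 1 }
        END { exit (found ? 0 : 1) }
    '
}

# Example use on a node (commented out so the snippet has no side effects):
#   ip -o -4 addr show | iface_has_ipv4 eth0 || echo "eth0 has no IPv4 address"
#   systemctl is-enabled NetworkManager
```

Reading from stdin keeps the helper easy to try against saved output before running it on a live VM.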

November 23, 2021 at 11:01 am #1128

Hi Mert,

Thanks for looking into this for us. So somehow the VM was restarted and lost its network configuration. We will make changes to let eth0 be managed by NetworkManager so it can survive a reboot.

But it looks like not only was the configuration lost, a network interface also disappeared. The VM was created with a second interface, eth1, but that interface no longer exists. We need the second interface to form a cluster.

[centos@node1 ~]$ sudo ip link show

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN mode DEFAULT group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 9000 qdisc fq_codel state UP mode DEFAULT group default qlen 1000

link/ether fa:16:3e:49:8e:5a brd ff:ff:ff:ff:ff:ff

3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN mode DEFAULT group default

link/ether 02:42:69:1f:14:22 brd ff:ff:ff:ff:ff:ff

[centos@node1 ~]$

Any idea on how to address the second issue?

Thanks,

Fengping

November 23, 2021 at 11:32 am #1129

I cannot comment on that without diving into the logs to see how the VM was created, how the PCIe device was attached, etc. Instead, I suggest starting a new slice (including the fixes for the management network), then checking the status step by step with respect to the requested devices. I will be able to help if you prefer this approach.

November 23, 2021 at 12:51 pm #1130

Hi Mert,

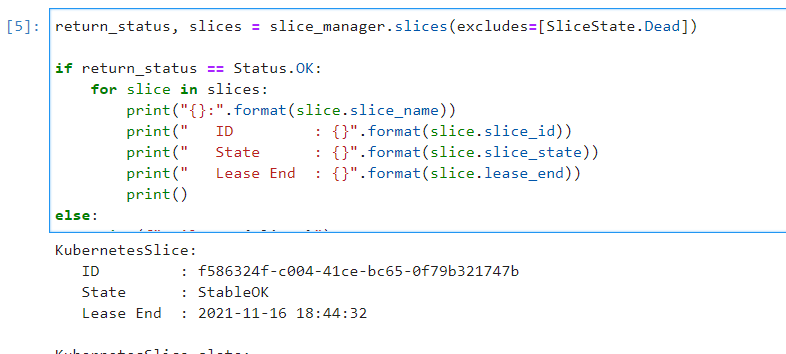

I’ve created a new slice: KubernetesSlice1 at site MAX. The management IP for node1 is 63.239.135.80. I also extended the lease for it.

NetworkManager is enabled, with eth1 and the Calico interfaces excluded.

Would you be able to check how this slice looks? The big question is whether it can stay like that after 1 day without losing its network interface, etc.

Thanks,

Fengping
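For readers wondering what "excluded" could look like in practice, here is one possible sketch. It is an assumption, not the poster's actual configuration: `nm_unmanaged_conf` is a hypothetical helper, and the interface patterns and the drop-in file name `calico.conf` in the usage comment are illustrative. It relies on NetworkManager's `unmanaged-devices` key in the `[keyfile]` section, which accepts `interface-name:` entries separated by semicolons.

```sh
# Hypothetical helper: print a NetworkManager drop-in that marks the given
# interface names (or glob patterns) as unmanaged.
nm_unmanaged_conf() {
    specs=""
    for pattern in "$@"; do
        # Join entries with ';' as NetworkManager expects.
        specs="${specs:+${specs};}interface-name:${pattern}"
    done
    printf '[keyfile]\nunmanaged-devices=%s\n' "$specs"
}

# Example use (commented out; the patterns and file name are illustrative):
#   nm_unmanaged_conf eth1 'cali*' 'tunl*' | sudo tee /etc/NetworkManager/conf.d/calico.conf
#   sudo systemctl reload NetworkManager
```

With a file like this in place, eth0 stays under NetworkManager's control while the data-plane and CNI interfaces are left alone.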

November 23, 2021 at 1:17 pm #1131

It seems the added NIC can’t survive a reboot.

I rebooted node2 in that slice. I can still log in to it via the management IP, which is good. The NVMe device is also still in the node. The problem is that eth1 is gone after the reboot.
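A quick way to spot a vanished interface after a reboot is to compare the interfaces you expect against what the kernel reports. This is a rough sketch under stated assumptions: `missing_ifaces` is a hypothetical helper, and it assumes the `ip -o link show` one-line format in which the second field is the interface name followed by a colon.

```sh
# Hypothetical helper: print each expected interface that is absent.
# Reads `ip -o link show` output on stdin; expected names are arguments.
missing_ifaces() {
    # Collect present names, stripping the trailing ':' and any '@parent' suffix.
    present="$(awk '{ name = $2; sub(/:$/, "", name); sub(/@.*/, "", name); print name }')"
    for want in "$@"; do
        printf '%s\n' "$present" | grep -qxF -- "$want" || printf '%s\n' "$want"
    done
}

# Example use on a node (commented out so the snippet has no side effects):
#   ip -o link show | missing_ifaces eth0 eth1
```

Run against the `ip link show` listing from earlier in the thread, a check like this would report eth1 as the missing interface.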

November 23, 2021 at 1:20 pm #1132

I don’t know that we tested reboots. I’m more surprised that the NVMe drive survived the reboot than that the NIC did not. We will add a ‘modify’ capability that should allow reattaching devices; this is on the roadmap.

November 23, 2021 at 1:42 pm #1133

Thanks for the clarification about reboots.

We don’t really have a need to reboot the VMs. But I believe the VMs in a slice are rebooted after one day, which I am guessing from the fact that we lose contact with the VMs after 1 day. With the NetworkManager fix, we will still be able to access the VMs via the management IP, but the VMs in the slice can no longer form a cluster without eth1.

So the big question for now: is there a way to avoid VMs getting rebooted during the slice lease period, especially those with attached devices?

November 23, 2021 at 1:44 pm #1134

Nothing we know of in the logic of our software requires the VM to be rebooted at 24 hours. We can test to make sure it doesn’t happen; we didn’t observe it in our recent testing related to your questions.

November 23, 2021 at 2:14 pm #1135

That makes sense. I think the DHCP lease on the IP expired while NetworkManager was disabled, so nothing renewed it. I think we should be good now. Thanks!
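To check this explanation on a node where NetworkManager manages eth0, the lease duration can be read from the DHCP options NetworkManager records. The sketch below is an assumption-laden illustration: `dhcp_lease_seconds` is a hypothetical helper, and it assumes `nmcli -f DHCP4 device show eth0` prints option lines of the form `DHCP4.OPTION[n]: dhcp_lease_time = <seconds>`.

```sh
# Hypothetical helper: extract the DHCP lease time in seconds.
# Reads `nmcli -f DHCP4 device show <iface>` output on stdin; assumes lines
# shaped like "DHCP4.OPTION[1]:   dhcp_lease_time = <seconds>".
dhcp_lease_seconds() {
    awk '$2 == "dhcp_lease_time" && $3 == "=" { print $4; exit }'
}

# Example use on a node (commented out so the snippet has no side effects):
#   nmcli -f DHCP4 device show eth0 | dhcp_lease_seconds
```

If the reported lease is about one day, that would be consistent with the VMs dropping off the management network roughly 24 hours after creation whenever no DHCP client was running to renew it.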
