Forum Replies Created


November 4, 2025 at 3:30 pm in reply to: Unable to allocate IP addresses to nodes – “No Management IP” #9147

Hi Geoff,

This appears to be a bug in fablib. As a workaround, could you please modify the call as follows?

    client_interface = client_node.get_interface(network_name="client-net", refresh=True)

This change should prevent the error from occurring. I’ll work on fixing this issue in fablib.

Best,

Komal

Hi Tanay,

BlueField-3 nodes are now available on FABRIC, and we currently offer two variants:

- ConnectX-7-100 – 100 G

- ConnectX-7-400 – 400 G

To provision and use them, your project lead will need to request access through the Portal under Experiment → Project → Request Permissions.

Best,

Komal

November 4, 2025 at 3:10 pm in reply to: Unable to allocate IP addresses to nodes – “No Management IP” #9144

Hi Geoff,

Just to confirm my understanding: your slice is in StableOK state, and the nodes display IP addresses as shown in your screenshot, but node.execute is failing with a “no management IP” error. Is that correct? Could you please share your Slice ID here?

Thanks,

Komal

Thank you, @yoursunny, for sharing these observations and the detailed steps to reproduce them. This appears to be a bug. I’ll work on addressing it and will update you once the patch is deployed.

Best,

Komal

Hi Jiri,

We’ve been investigating two issues related to your recent observation:

- Slice reaches StableOK, but management IPs don’t appear – This behavior seems to be caused by performance degradation in our backend graph database. We’re actively working to address and mitigate this issue.

- Slice stuck in “doing post Boot Config” – This issue was traced to one of the bastion hosts. A fix for this has been applied earlier today.

If your slice is still active, could you please share the Slice ID where you observed this behavior? Additionally, if you encounter this issue again, it would be very helpful if you could send us the log file located at /tmp/fablib/fablib.log. This information will help us investigate and debug the issue more effectively.
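If copying the log by hand is inconvenient, a minimal Python sketch like the one below prints the most recent lines of the file so they can be pasted into a reply. It assumes only the log location given above (/tmp/fablib/fablib.log); the helper name `tail_log` is hypothetical and not part of fablib.

```python
from collections import deque
from pathlib import Path

FABLIB_LOG = Path("/tmp/fablib/fablib.log")  # log location mentioned in this reply


def tail_log(path: Path = FABLIB_LOG, max_lines: int = 200) -> list[str]:
    """Return up to `max_lines` trailing lines of a log file (hypothetical helper)."""
    if not path.exists():
        return []
    with path.open("r", encoding="utf-8", errors="replace") as fh:
        # A deque with maxlen keeps only the newest lines while streaming the file
        return [line.rstrip("\n") for line in deque(fh, maxlen=max_lines)]


if __name__ == "__main__":
    for line in tail_log():
        print(line)
```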

Best regards,

Komal

Hi Fatih,

You are absolutely correct: in the FABRIC testbed, the term “host” refers to a single physical machine, not a group of blades or multiple servers.

Regarding your question about the core count:

The host you mentioned (for example, seat-w2) reports 128 CPUs because the physical server has two AMD EPYC processors, each with 32 physical cores, and hyperthreading is enabled. This means each physical core presents two logical CPUs (threads) to the operating system. So, the breakdown is:

- 2 sockets × 32 physical cores per socket = 64 physical cores

- With hyperthreading (2 threads per core): 64 × 2 = 128 logical CPUs
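The same breakdown as a tiny Python helper, only to make the arithmetic explicit; the function name is illustrative and not part of any FABRIC tool.

```python
def logical_cpus(sockets: int, cores_per_socket: int, threads_per_core: int) -> int:
    """Logical CPUs seen by the OS = sockets x physical cores per socket x threads per core."""
    physical_cores = sockets * cores_per_socket
    return physical_cores * threads_per_core


# Figures for the host discussed above (for example, seat-w2)
print(logical_cpus(sockets=2, cores_per_socket=32, threads_per_core=2))  # prints 128
```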

Inside a VM, you’ll typically see the processor model name (e.g., AMD EPYC 7543 32-Core Processor), which corresponds to the physical CPU model installed in the host. The number of vCPUs visible in the VM depends on the resources allocated to it by the hypervisor, not the total physical core count of the host.
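To see both values from inside a Linux VM, a minimal sketch can parse /proc/cpuinfo, the standard Linux file that lists one "processor" entry per logical CPU visible to the guest. The helper `summarize_cpuinfo` is hypothetical; running `nproc` or `lscpu` in the VM reports the same information.

```python
from pathlib import Path


def summarize_cpuinfo(text: str) -> tuple[str, int]:
    """Return (model name, number of logical CPUs) from /proc/cpuinfo-style text."""
    model = ""
    count = 0
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue  # blank separator lines between CPU stanzas
        key = key.strip()
        if key == "processor":
            count += 1  # one "processor" stanza per vCPU allocated to the VM
        elif key == "model name" and not model:
            model = value.strip()
    return model, count


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():  # only present on Linux
        model, vcpus = summarize_cpuinfo(cpuinfo.read_text())
        print(f"{model}: {vcpus} vCPUs allocated to this VM")
```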

In summary:

- Host = one physical machine

- 128 cores = 64 physical cores × 2 threads (hyperthreading)

- VM CPU info = underlying processor model, showing only allocated vCPUs

You can find more details about the hardware configurations for a FABRIC site here:

https://learn.fabric-testbed.net/knowledge-base/fabric-site-hardware-configurations/

Best regards,

Komal

Hi Fatih,

When requesting a VM in a slice, specifying the host parameter (for example, seat-w2.fabric-testbed.net) ensures that the VM is provisioned on that particular physical host. If multiple VMs in the same slice specify the same host (e.g., seat-w2.fabric-testbed.net), they will all be co-located on that same physical machine. This can be done as follows:

    slice.add_node(name="node1", host="seat-w2.fabric-testbed.net", ...)
    slice.add_node(name="node2", host="seat-w2.fabric-testbed.net", ...)

If the host parameter is not specified, the FABRIC Orchestrator automatically places the VMs across available hosts based on resource availability, which may result in them being distributed across different physical machines. If the requested host cannot accommodate the VMs (due to limited capacity or resource constraints), the system will return an “Insufficient resources” error.
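When several VMs must share one host, the repeated calls can be wrapped in a small helper. This is a sketch that assumes only the `add_node(name=..., host=..., ...)` call shown above; the helper `add_colocated_nodes` itself is hypothetical and not part of fablib.

```python
from typing import Any, Iterable


def add_colocated_nodes(slice_obj: Any, names: Iterable[str], host: str, **node_kwargs: Any) -> list:
    """Add one VM per name to `slice_obj`, pinning every VM to the same physical `host`.

    `slice_obj` is assumed to expose fablib's add_node(name=..., host=..., ...);
    any extra keyword arguments are passed through to every add_node call unchanged.
    """
    return [slice_obj.add_node(name=name, host=host, **node_kwargs) for name in names]
```

With a real fablib slice object, the call would look like `add_colocated_nodes(slice, ["node1", "node2"], "seat-w2.fabric-testbed.net")`, with any other node settings passed as extra keyword arguments.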

Best regards,

Komal

October 10, 2025 at 9:44 am in reply to: Slice Creation Error&Guidance on Network Service Choice for Multi-Sit #9091

Hello Yuanhao,

Thank you for the detailed description of your experiment and topology; that’s very helpful.

For your described setup with multiple independent, point-to-point internal links between your own nodes across sites, using L2STS is perfectly valid and appropriate. L2STS provides private Layer-2 connectivity between nodes within your slice and is generally recommended when you want internal point-to-point links without stitching to external networks.

L2PTP, on the other hand, is typically used when:

- You are connecting Dedicated NICs,

- You need guaranteed QoS (bandwidth reservation), or

- You want to explicitly control the path (e.g., define intermediate hop sites).
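The rule of thumb above can be restated as a small decision function. It is only an illustrative summary of this reply, not a FABRIC API, and the final choice should still follow the Network Services documentation.

```python
def suggest_l2_service(dedicated_nics: bool, need_bandwidth_guarantee: bool, explicit_path: bool) -> str:
    """Prefer L2STS for internal links unless one of the L2PTP-specific needs applies."""
    if dedicated_nics or need_bandwidth_guarantee or explicit_path:
        return "L2PTP"
    return "L2STS"


# The setup described in this thread: internal point-to-point links, no special requirements
print(suggest_l2_service(dedicated_nics=False, need_bandwidth_guarantee=False, explicit_path=False))
```

In fablib, a string like this is what would typically be passed as the `type` argument of `slice.add_l2network(...)`; treat that as an assumption to verify against the fablib documentation for your version.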

Given that, your current L2STS-based slice (l25gclplus-yuanhao5) is indeed a good configuration for your 5G experiment.

Regarding the L2PTP error, you’re correct: the message indicates that the interface must be tagged with a VLAN ID. There appears to be a bug in the portal’s Slice Builder that currently prevents specifying VLAN tags for L2PTP connections. Thank you for helping us identify it; we will work to address it.

In the meantime, you can continue using L2STS, which should meet your needs for internal connectivity.

For additional context and examples, please refer to our Network Services documentation:

https://learn.fabric-testbed.net/knowledge-base/network-services-in-fabric/

Also, I’d recommend checking out the example notebooks available under the JupyterHub tab on the FABRIC Portal; they provide working examples of various network configurations, including multi-site and point-to-point topologies.

Best regards,

Komal Thareja

October 8, 2025 at 2:01 pm in reply to: Unable to find SSH commands for slice that is being provisioned #9082

It prints the interfaces after the slice is completely up.

Could you please share a snippet of code or screenshot where you are observing this?

Best,

Komal

October 8, 2025 at 6:29 am in reply to: Unable to find SSH commands for slice that is being provisioned #9080

Hi Sourya,

Could you please delete your slice and try creating it again?

Best,

Komal

This was resolved offline.

The issue here was that downloading .c code from the JupyterHub (JH) container to the desktop resulted in .txt files. Recommended solution: create a tar file of the entire directory and download that tar file instead.
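The same workaround as a short Python sketch that can be run from a notebook cell in the JupyterHub container; the directory and archive names are placeholders. Running `tar -czf mycode.tar.gz mycode/` in a terminal does the same thing.

```python
import tarfile
from pathlib import Path


def archive_directory(src_dir: str, archive_path: str) -> str:
    """Pack `src_dir` into a gzip-compressed tarball so file names and extensions survive the download."""
    src = Path(src_dir)
    with tarfile.open(archive_path, "w:gz") as tar:
        # arcname stores paths relative to the directory name instead of absolute paths
        tar.add(src, arcname=src.name)
    return archive_path


# Example (placeholder names): archive_directory("mycode", "mycode.tar.gz"), then download mycode.tar.gz
```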

Best,

Komal

October 7, 2025 at 11:06 pm in reply to: Maintenance Network AM – 10/07/2025 – 8:00 pm – 9:00 pm #9078

Maintenance is complete and the network model has been updated!

Hi Nirmala,

I tried the example below, and it seems to be working. The first parameter is the local path where the file should be saved, and the second parameter is the remote location of the file to be downloaded:

    node.download_file('hello.c', '/home/rocky/hello.c')

Could you please share which version of fablib you are using?
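To fetch several files with the same argument order, a minimal loop like the one below works on a fablib node object. `download_many` is a hypothetical helper; only the `node.download_file(local, remote)` call used above is assumed.

```python
from pathlib import Path, PurePosixPath
from typing import Any, Iterable


def download_many(node: Any, remote_paths: Iterable[str], local_dir: str = ".") -> list[str]:
    """Download each remote file into `local_dir`, keeping its original file name.

    Assumes the argument order described above: download_file(local_path, remote_path).
    """
    saved = []
    for remote in remote_paths:
        # Remote VMs use POSIX paths, so take the file name with PurePosixPath
        local = str(Path(local_dir) / PurePosixPath(remote).name)
        node.download_file(local, remote)  # local path first, remote path second
        saved.append(local)
    return saved
```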

Best,

Komal

Hi Rasman,

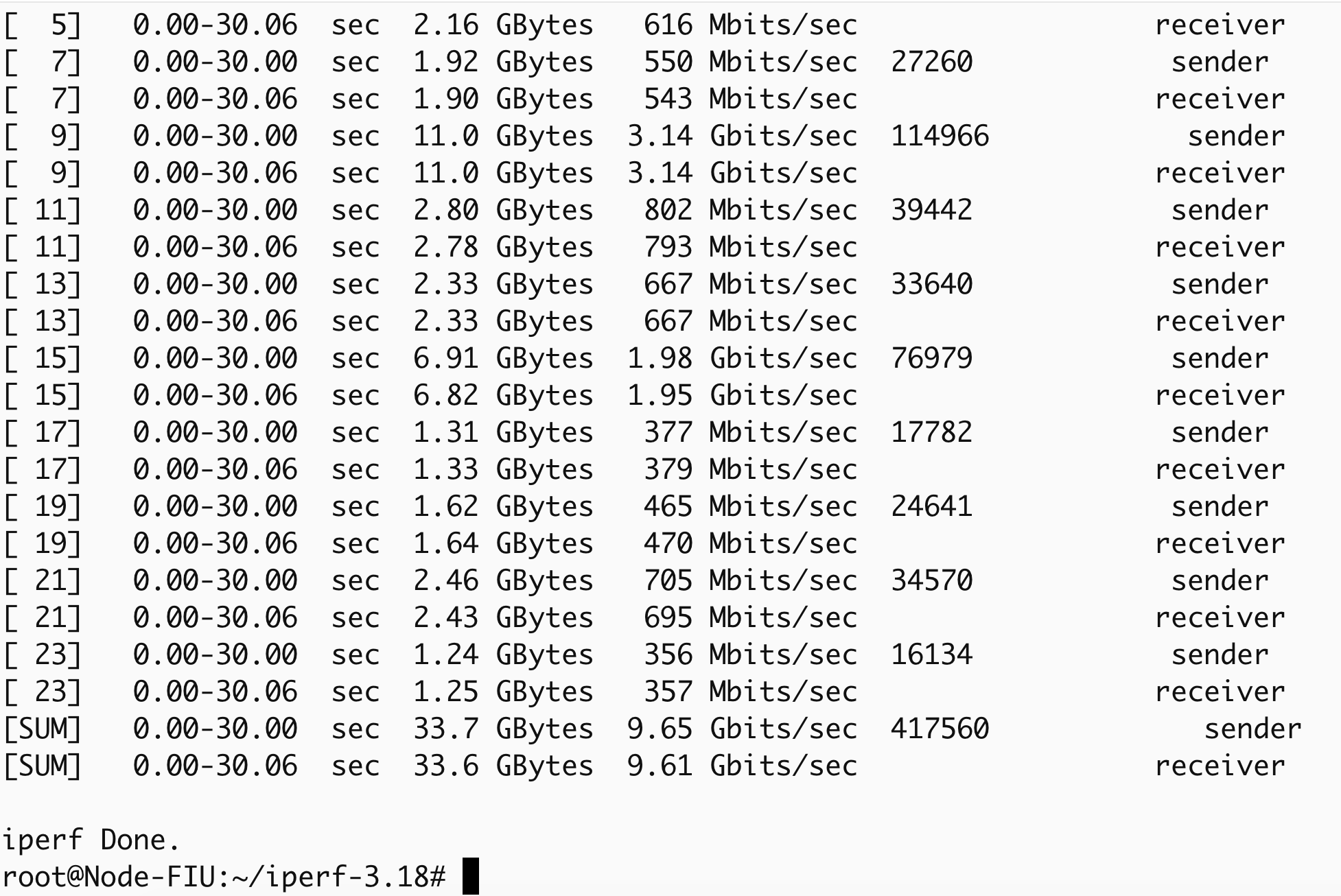

I forgot to mention that the steps for installing iperf3 should be run as the root user. On your VM, I did the following:

    sudo su -
    curl -L https://github.com/esnet/iperf/releases/download/3.18/iperf-3.18.tar.gz > iperf-3.18.tar.gz
    tar -zxvf iperf-3.18.tar.gz
    cd iperf-3.18
    sudo apt update
    sudo apt install build-essential
    sudo ./configure; make; make install
    sudo ldconfig

I also applied the following host tuning (node_tools/host_tune.sh) on both VMs:

    #!/bin/bash
    # Linux host tuning from https://fasterdata.es.net/host-tuning/linux/
    cat >> /etc/sysctl.conf <<EOL
    # allow testing with buffers up to 512MB
    net.core.rmem_max = 536870912
    net.core.wmem_max = 536870912
    # increase Linux autotuning TCP buffer limit to 512MB
    net.ipv4.tcp_rmem = 4096 87380 536870912
    net.ipv4.tcp_wmem = 4096 65536 536870912
    # recommended default congestion control is htcp or bbr
    net.ipv4.tcp_congestion_control = bbr
    # recommended for hosts with jumbo frames enabled
    net.ipv4.tcp_mtu_probing = 1
    # recommended to enable 'fair queueing'
    net.core.default_qdisc = fq
    #net.core.default_qdisc = fq_codel
    EOL

    sysctl --system

    # Turn on jumbo frames
    for dev in `basename -a /sys/class/net/*`; do
        ip link set dev $dev mtu 9000
    done

With these changes, I’m now seeing bandwidth close to 10G (see snapshot below).

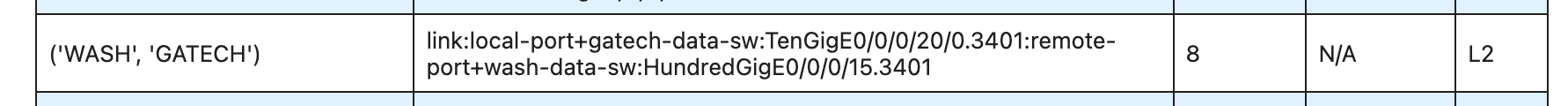
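To compare runs without reading screenshots, iperf3 can emit JSON with `-J`, for example `iperf3 -c <server-ip> -P 8 -J > result.json`, where the server address and stream count are placeholders. The sketch below reads the aggregate receiver-side rate that iperf3 reports for TCP tests under `end.sum_received`; the helper name is hypothetical.

```python
import json


def iperf3_receiver_gbps(json_text: str) -> float:
    """Return aggregate receiver-side throughput in Gbit/s from `iperf3 -J` TCP output."""
    result = json.loads(json_text)
    # For TCP tests, iperf3 sums all parallel streams under end.sum_received
    bits_per_second = result["end"]["sum_received"]["bits_per_second"]
    return bits_per_second / 1e9


# Usage: iperf3_receiver_gbps(open("result.json").read())
```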

According to fablib.list_links(), links from GATECH are capped at 8G. I’d suggest trying a different site instead of GATECH.

Regarding the slice getting stuck at Submit: your keys may have expired. Please try running the notebook jupyter-examples-rel1.9.0/configure_and_validate/configure_an_validate.ipynb. This should automatically renew your keys if needed.

If it still hangs at submit, please check /tmp/fablib/fablib.log for errors and share them here.

Best,

Komal

Hi Rasman,

By default, the standard iperf3 version does not perform well with multiple streams. ESnet provides a patched version that resolves this issue and delivers significantly better performance. This fixed iperf3 is already packaged inside the container.

If you would like to run it directly on the host, you can install it with the following steps:

    curl -L https://github.com/esnet/iperf/releases/download/3.18/iperf-3.18.tar.gz > iperf-3.18.tar.gz
    tar -zxvf iperf-3.18.tar.gz
    cd iperf-3.18
    sudo apt update
    sudo apt install build-essential
    sudo ./configure
    make
    sudo make install
    sudo ldconfig

Additionally, please make sure that the script node_tools/host_tune.sh (included with the notebook) has been executed on the relevant nodes. If you continue to see lower bandwidth, kindly share your slice ID so I can take a closer look.
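To confirm that the tuning actually took effect on a node, a minimal sketch can compare the live values under /proc/sys with the ones written by the host_tune.sh script quoted in the reply above. The helper names are hypothetical and the expected values are copied from that script; running `sysctl <name>` on the node shows the same values.

```python
from pathlib import Path

# Values written to /etc/sysctl.conf by the host_tune.sh script quoted above
EXPECTED = {
    "net.core.rmem_max": "536870912",
    "net.core.wmem_max": "536870912",
    "net.ipv4.tcp_rmem": "4096 87380 536870912",
    "net.ipv4.tcp_wmem": "4096 65536 536870912",
    "net.ipv4.tcp_congestion_control": "bbr",
    "net.ipv4.tcp_mtu_probing": "1",
    "net.core.default_qdisc": "fq",
}


def read_sysctl(name: str, root: str = "/proc/sys") -> str:
    """Read a sysctl such as 'net.core.rmem_max' from the /proc/sys tree."""
    path = Path(root, *name.split("."))
    # Normalise whitespace: multi-value entries like tcp_rmem are tab-separated
    return " ".join(path.read_text().split())


def untuned_settings(root: str = "/proc/sys") -> dict[str, str]:
    """Return {name: current value} for every setting that differs from EXPECTED."""
    mismatches = {}
    for name, expected in EXPECTED.items():
        current = read_sysctl(name, root)
        if current != expected:
            mismatches[name] = current
    return mismatches
```

An empty result from `untuned_settings()` means the node matches the tuned values.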

Thanks,

Komal