FABRIC provides a myriad of options for interconnecting the VMs within a slice (or, more precisely, the NIC and FPGA cards in those VMs). Because the range of options is so wide, it is not always obvious how to interconnect VMs to obtain a desired topology. This brief article explains how.

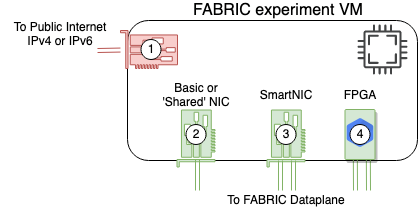

The figure above shows a typical FABRIC VM with several NICs, each linked either to the FABRIC management network (used to log in to VMs from the Internet) or to the FABRIC Dataplane network (used to carry experiment traffic between VMs). [Note: the FABRIC Dataplane network can be set up to peer with the public Internet, but this must be explicitly requested and is not discussed in this article.]

Management Interfaces

Each VM always comes with a single ‘management’ interface (number 1 in the figure). It is assigned a single IPv6 or IPv4 address when the VM boots; the choice between IPv4 and IPv6 is made by the site, or more precisely by the hosting campus or facility where the site is located. The management interface serves two key roles: (1) it enables users to log in to the VM, and (2) it allows the VM to connect out to the Internet (for example, to download software). Incoming connections to the management interface are allowed only from the Bastion host, and only via SSH; you cannot SSH directly into a VM’s management interface from the Internet. Once logged in to a VM, users can open outgoing connections through the management interface to download data and software into the VM. For the most part, outgoing connections are not restricted.
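Because incoming SSH is accepted only from the Bastion host, every login to a VM has to hop through it. A common way to arrange that is an OpenSSH client config with a `ProxyJump` entry. The Python sketch below generates such a config and the matching `ssh` command line. It is a minimal sketch under stated assumptions: the bastion hostname `bastion.fabric-testbed.net`, the usernames, and the key paths are placeholders chosen for illustration, so check the FABRIC documentation and your Portal account for the actual values. The VM address is validated with `ipaddress`, so either an IPv4 or an IPv6 management address is accepted.

```python
import ipaddress

# Assumed bastion hostname; confirm it in the FABRIC documentation.
BASTION_HOST = "bastion.fabric-testbed.net"


def render_ssh_config(bastion_user: str, bastion_key: str,
                      bastion_host: str = BASTION_HOST) -> str:
    """Return an OpenSSH client config that reaches VMs only through the bastion."""
    return (
        # Direct connection to the bastion itself, using the bastion key.
        f"Host {bastion_host}\n"
        f"    User {bastion_user}\n"
        f"    IdentityFile {bastion_key}\n"
        "\n"
        # Any other host (e.g. a VM management address) is reached via ProxyJump.
        f"Host * !{bastion_host}\n"
        f"    ProxyJump {bastion_user}@{bastion_host}:22\n"
    )


def ssh_command(config_path: str, slice_key: str, vm_user: str, vm_ip: str) -> list[str]:
    """Build the argv for logging in to a VM's management interface."""
    # Accepts IPv4 or IPv6, since the address family is chosen by the site;
    # raises ValueError for anything that is not an IP address.
    addr = ipaddress.ip_address(vm_ip)
    return ["ssh", "-F", config_path, "-i", slice_key, f"{vm_user}@{addr}"]


if __name__ == "__main__":
    # Hypothetical placeholder values; substitute your own.
    print(render_ssh_config("alice_0000000000", "~/.ssh/fabric_bastion_key"))
    print(" ".join(ssh_command("ssh_config", "~/.ssh/slice_key",
                               "ubuntu", "2001:db8::10")))
```

Saving the generated text to a separate file (here `ssh_config`) and passing it with `-F` leaves your personal `~/.ssh/config` untouched. The VM login user (`ubuntu` here) is an assumption; it depends on the image the VM was booted from.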

FABRIC Dataplane Interfaces

Traffic generated by an experiment must travel across the FABRIC Dataplane, so users must add network interfaces that attach to the FABRIC Dataplane to their VMs. These Dataplane interfaces come in several flavors, described in the following table:

| Name | Typical quantity per site | Ports per card | Description |
|---|---|---|---|
| ‘Basic’ or ‘Shared’ NIC | 300+ | 1 | Virtual NICs implemented with SR-IOV (Single Root I/O Virtualization) on top of a single 100Gbps ConnectX-6 physical card. |
| ‘Smart’ NIC | 4-6 | 2 | Physical NICs made available directly to your VM. They come in three varieties: ConnectX-5 10/25Gbps (typically 2-4 per site); ConnectX-6 100Gbps (typically 2 per site); ConnectX-7 100/400Gbps (typically 1 per site). |
| FPGA | 1 | 2 | Alveo U280 FPGAs |
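The ports column determines how many Dataplane interfaces a VM ends up with. The short Python sketch below encodes the per-card port counts from the table and lists one interface label per port for a given mix of cards. The dictionary keys (`basic`, `cx5`, ...) and the label format are hypothetical, chosen only for illustration; they are not FABlib model names. The `shared` flag reflects our understanding that only the Basic NIC sits on a physical card shared between VMs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataplaneCard:
    description: str
    ports: int     # dataplane ports the card exposes to the VM
    shared: bool   # True if the underlying physical card is shared between VMs


# Port counts follow the table; the short keys are hypothetical labels.
CARDS = {
    "basic": DataplaneCard("Basic/Shared NIC (SR-IOV on ConnectX-6)", 1, True),
    "cx5": DataplaneCard("Smart NIC, ConnectX-5 10/25Gbps", 2, False),
    "cx6": DataplaneCard("Smart NIC, ConnectX-6 100Gbps", 2, False),
    "cx7": DataplaneCard("Smart NIC, ConnectX-7 100/400Gbps", 2, False),
    "fpga": DataplaneCard("Alveo U280 FPGA", 2, False),
}


def dataplane_interfaces(components: list[str]) -> list[str]:
    """Return one label per dataplane port, e.g. 'cx6[1].p2'.

    The bracketed index is the card's position in `components`.
    Raises KeyError for an unknown card key.
    """
    labels = []
    for index, key in enumerate(components):
        card = CARDS[key]
        labels.extend(f"{key}[{index}].p{port}" for port in range(1, card.ports + 1))
    return labels


if __name__ == "__main__":
    # A VM with one shared NIC and one ConnectX-6 Smart NIC gets three ports.
    print(dataplane_interfaces(["basic", "cx6"]))
    # prints ['basic[0].p1', 'cx6[1].p1', 'cx6[1].p2']
```

Each of these ports is a separate endpoint that can be attached to a network service, which is why a single Smart NIC or FPGA can take part in two different links.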

Each of these components can be connected to components in other VMs of your slice using one of the FABRIC network services:

- Layer 2 services such as L2Bridge, L2PTP, and L2STS
- Layer 3 services such as FABNetv4 and FABNetv6
- Special services such as Port Mirroring
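Which service fits depends largely on where the interfaces to be connected are located. The Python sketch below encodes a set of selection rules as we understand them from the FABRIC service documentation; they are assumptions, not statements made in this article: L2Bridge joins interfaces at a single site, L2STS joins interfaces spread across exactly two sites, L2PTP links exactly two interfaces at two different sites and requires dedicated (Smart) NICs, and FABNetv4/FABNetv6 provide routed Layer 3 connectivity between interfaces at any sites. Port Mirroring is left out. The function `candidate_services` is hypothetical, not part of FABlib; verify the rules against current FABRIC documentation before relying on them.

```python
def candidate_services(interface_sites: list[str], all_dedicated: bool) -> list[str]:
    """Return FABRIC network services that could join these interfaces.

    interface_sites: the site of each interface to connect (one entry per interface).
    all_dedicated: True if every interface is on a dedicated (Smart) NIC.
    The rules are assumptions about FABRIC, not an official API.
    """
    if len(interface_sites) < 2:
        raise ValueError("need at least two interfaces to interconnect")
    sites = set(interface_sites)
    candidates = []
    if len(sites) == 1:
        candidates.append("L2Bridge")  # all interfaces at one site
    elif len(sites) == 2:
        candidates.append("L2STS")  # Layer 2 between two sites
        if len(interface_sites) == 2 and all_dedicated:
            candidates.append("L2PTP")  # point-to-point, dedicated NICs only
    # Routed Layer 3 services are not limited by how many sites are involved.
    candidates.extend(["FABNetv4", "FABNetv6"])
    return candidates


if __name__ == "__main__":
    # Hypothetical site names.
    print(candidate_services(["SITE_A", "SITE_B"], all_dedicated=True))
```

Layer 2 services give the experiment a raw Ethernet segment to address as it likes, while the Layer 3 services trade that control for routing between any number of sites.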

All Dataplane connectivity is configured either with the Slice Builder in the FABRIC Portal or with Python FABlib code in Jupyter notebooks.